Paper on Cauchy–Schwarz Regularizers Accepted to ICLR 2025!

The paper on Cauchy–Schwarz regularizers by Sueda Taner and Ziyi Wang has been accepted to the International Conference on Learning Representations (ICLR) 2025.

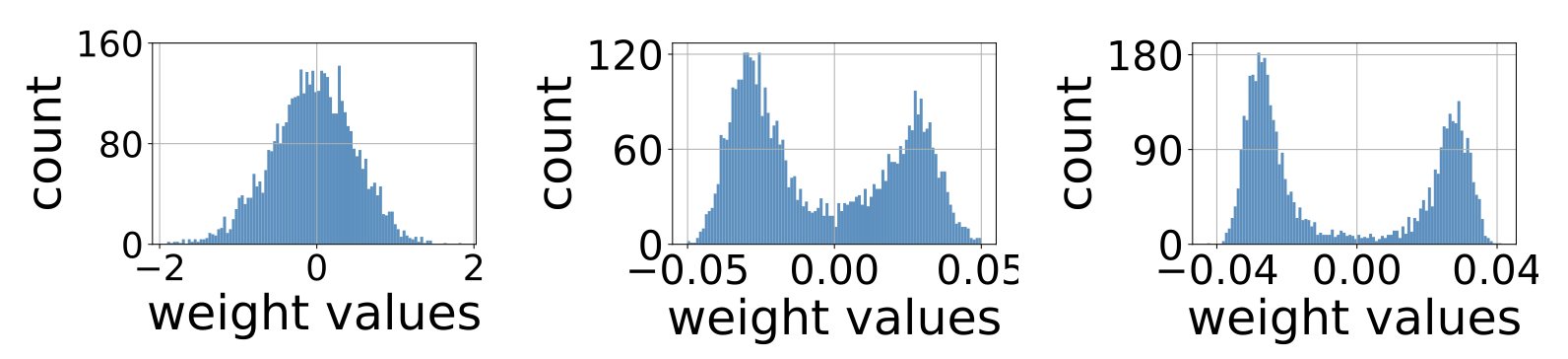

In “Cauchy–Schwarz Regularizers,” a novel class of regularization functions, called “Cauchy–Schwarz (CS) regularizers,” is introduced. These functions can be designed to induce a wide range of properties in solution vectors of optimization problems. To demonstrate the versatility of CS regularizers, regularization functions that promote discrete-valued vectors, eigenvectors of a given matrix, and orthogonal matrices are derived. The resulting CS regularizers are simple, differentiable, and can be free of spurious stationary points, making them suitable for gradient-based solvers and large-scale optimization problems. In addition, CS regularizers automatically adapt to the appropriate scale, which is, for example, beneficial when discretizing the weights of neural networks. To demonstrate the efficacy of CS regularizers, results for solving underdetermined systems of linear equations and weight quantization in neural networks are provided. Furthermore, specializations, variations, and generalizations leading to an even broader class of powerful regularizers are discussed.
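To give a feel for the idea, here is a minimal sketch of how a regularizer can be built from the Cauchy–Schwarz inequality, which states that ⟨a, b⟩² ≤ ‖a‖² ‖b‖² with equality exactly when a and b are linearly dependent. Choosing a as the elementwise square of x and b as the all-ones vector gives a nonnegative, differentiable function that vanishes exactly when all entries of x share the same magnitude, whatever that magnitude is. This construction, and the hypothetical names `cs_binarizer` and `cs_binarizer_grad`, are illustrative assumptions and not necessarily the exact formulation used in the paper.

```python
import numpy as np


def cs_binarizer(x):
    """Cauchy-Schwarz gap ||a||^2 ||b||^2 - <a, b>^2 with a = x**2, b = ones.

    Illustrative assumption, not taken from the paper: the value is zero
    exactly when every entry of x has the same magnitude.
    """
    x = np.asarray(x, dtype=float)
    a = x ** 2               # elementwise squares of the entries
    b = np.ones_like(x)      # all-ones reference vector
    return np.dot(a, a) * np.dot(b, b) - np.dot(a, b) ** 2


def cs_binarizer_grad(x):
    """Gradient of cs_binarizer: 4*N*x^3 - 4*sum(x^2)*x."""
    x = np.asarray(x, dtype=float)
    n = x.size
    return 4.0 * n * x ** 3 - 4.0 * np.sum(x ** 2) * x


if __name__ == "__main__":
    print(cs_binarizer([0.5, -0.5, 0.5]))   # entries share one magnitude
    print(cs_binarizer([1.0, 2.0, 3.0]))    # entries differ in magnitude
```

Because the function is zero for any common magnitude, no target scale has to be fixed in advance, which is one way to read the scale-adaptivity mentioned in the abstract. The closed-form gradient makes the sketch usable as a penalty term in a gradient-based solver.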

The paper was co-authored by Sueda Taner, Ziyi Wang, and Prof. Christoph Studer from the IIP Group and will be presented at the International Conference on Learning Representations in Singapore in April 2025. You can find a preprint of the paper on arXiv.